Model Context Protocol (MCP): Providing context to AI

15 Jul 2025

Introduction

Over the past few months, Model Context Protocol (MCP) has been making waves in the AI and developer communities. From being hailed as a revolutionary advancement in Large Language Models (LLMs) to being dismissed as just an API, MCP has sparked a wide range of opinions.

So what exactly is MCP, and why is it important?

In this post, we’ll unpack what MCP is, look at how it integrates with AI systems like LLMs, and explore real-world use cases showing how it enhances AI applications. Whether you’re a developer building with AI or a business leader exploring smarter automation, understanding MCP could be crucial to unlocking the full potential of your AI integrations.

What Is MCP?

Model Context Protocol (MCP) is an open standard that enables AI systems—particularly LLMs—to access external context in a structured and secure way.

Out of the box, LLMs only know what they were trained on. That training data is vast, but it is often outdated or incomplete when you’re building real-world apps. MCP bridges this gap by allowing LLMs to interact with real-time data, tools, and resources, giving the model the context it needs to respond with much more relevance and specificity.

At its core, MCP defines three key capabilities that servers can expose to AI systems:

- Tools: These are executable functions—like running a data analysis, executing a machine learning model, or querying an internal system.

- Prompts: Prewritten prompt templates or commands that guide how the LLM should interact with the data or service.

- Resources: Structured or unstructured data—files, logs, tables, analytics outputs—that add context to the model’s reasoning.
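As a rough illustration, the three capability types can be modelled as plain data records. The sketch below is a simplified picture, not the official SDK: the class names and the example `query_sales` tool, `file:///logs/app.log` resource, and `summarise_report` prompt are hypothetical. The field names (`inputSchema`, `uri`, `mimeType`, `arguments`) follow the descriptors used in the MCP specification.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class Tool:
    """An executable function the server offers to the model."""
    name: str
    description: str
    inputSchema: dict  # JSON Schema describing the accepted arguments


@dataclass
class Resource:
    """A piece of readable context, addressed by URI."""
    uri: str
    name: str
    mimeType: str = "text/plain"


@dataclass
class Prompt:
    """A reusable prompt template with named arguments."""
    name: str
    description: str
    arguments: list = field(default_factory=list)


# Hypothetical examples of each capability type.
capabilities = {
    "tools": [Tool(
        name="query_sales",
        description="Return total sales for a given quarter.",
        inputSchema={
            "type": "object",
            "properties": {"quarter": {"type": "string"}},
            "required": ["quarter"],
        },
    )],
    "resources": [Resource(uri="file:///logs/app.log", name="Application log")],
    "prompts": [Prompt(
        name="summarise_report",
        description="Summarise a report for a chosen audience.",
        arguments=[{"name": "audience", "required": True}],
    )],
}

# Serialise to plain dictionaries, similar to what a client would see
# when it lists each capability type.
listing = {kind: [asdict(item) for item in items] for kind, items in capabilities.items()}
```

Keeping the descriptors as plain data is what lets any client discover them without knowing anything about the service behind the server.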

Think of MCP as the USB-C of LLM integrations: a standard way to plug any service into an LLM and give it the context it needs.

Integrating MCP: The Problem It Solves

Before MCP, integrating LLMs with real-world services meant stitching together multiple APIs, writing custom glue code, and managing a wide variety of formats and protocols. Each integration was bespoke and often fragile — creating a high barrier for developers who wanted to add meaningful, real-time context to LLMs.

This setup creates several problems:

- Duplication of logic: Each service-LLM interaction must be hand-crafted.

- Maintenance overhead: Custom code breaks as services evolve.

- Poor scalability: Adding new data sources or functionality becomes progressively more complex.

This is the exact integration problem MCP is designed to solve.

How MCP Works: Architecture and Implementation

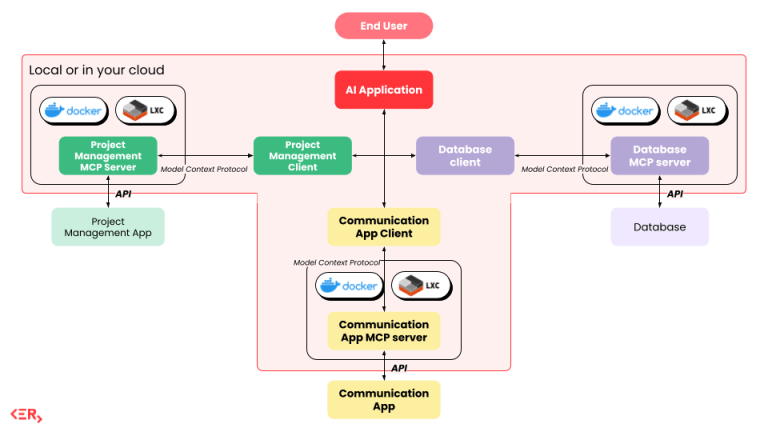

MCP introduces a standardized, modular integration model that separates the application logic from the service logic—making it dramatically easier to build, scale, and manage context-aware AI.

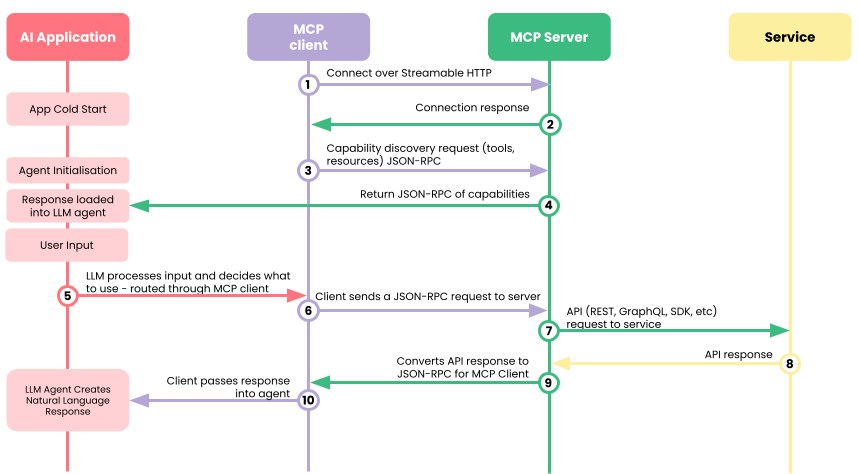

At a high level, MCP follows a client-server architecture:

- The MCP client (your application or user interface) exchanges requests and responses with both the LLM and the MCP server.

- The MCP server acts as a middle layer between your client and your service. It defines and exposes three kinds of capabilities:

  - 🔧 Tools: Executable functions, such as running a data model or system query.

  - 📂 Resources: Contextual data such as files, tables, logs, or analysis results.

  - ✍️ Prompts: Predefined prompt templates or slash-command-style user interactions.

In practice, as the diagrams above show, each service (e.g. a database, internal tool, or SaaS platform) can be wrapped with an MCP server that speaks a unified protocol. Your LLM-powered app connects to these servers via standard interfaces, which makes it easy to teach the AI new capabilities without custom glue code.
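Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. The sketch below shows roughly what a client sends to discover and invoke a tool. `tools/list` and `tools/call` are method names from the MCP specification, while the `get_weather` tool, its arguments, and the `make_request` helper are hypothetical.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request with a fresh id."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# 1. Ask the server which tools it exposes.
list_request = make_request("tools/list")

# 2. Call one of them by name, passing arguments that match its input schema.
call_request = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Sydney"}},
)

# A response echoes the request id and carries a result (or an error) object.
example_response = {
    "jsonrpc": "2.0",
    "id": call_request["id"],
    "result": {
        "content": [{"type": "text", "text": "22 degrees and sunny"}],
        "isError": False,
    },
}

print(json.dumps(call_request, indent=2))
```

Because every server answers the same method names, the client code above does not change when you swap one service for another.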

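MCP clients and servers communicate over one of two transports: STDIO and SSE. (Newer revisions of the MCP specification also define a Streamable HTTP transport that supersedes standalone SSE.)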

- STDIO is simple and ideal for local or small-scale development, especially for quick prototyping or CLI-based tools.

- SSE (Server-Sent Events) is better suited for production and scalable environments, enabling real-time, persistent HTTP connections where servers can push updates to clients.

For our projects, we use SSE to support scalable, long-lived connections over HTTP.
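To make the difference concrete, here is a small sketch of how one JSON-RPC message could be framed on each transport. It assumes newline-delimited JSON for STDIO and the standard `event:`/`data:` text format for SSE. The helper names are hypothetical, and no real network or process I/O is involved.

```python
import json


def frame_stdio(message: dict) -> str:
    """STDIO: one JSON document per line (json.dumps escapes embedded newlines)."""
    return json.dumps(message, separators=(",", ":")) + "\n"


def frame_sse(message: dict, event: str = "message") -> str:
    """SSE: text fields, with a blank line marking the end of the event."""
    return f"event: {event}\ndata: {json.dumps(message)}\n\n"


def parse_sse(stream: str) -> list:
    """Parse a buffer of SSE text into (event, payload) tuples."""
    events = []
    for block in stream.split("\n\n"):
        if not block.strip():
            continue
        event, data_lines = "message", []
        for line in block.split("\n"):
            if line.startswith("event:"):
                event = line[len("event:"):].strip()
            elif line.startswith("data:"):
                data_lines.append(line[len("data:"):].strip())
        if data_lines:
            events.append((event, json.loads("\n".join(data_lines))))
    return events


ping = {"jsonrpc": "2.0", "id": 1, "method": "ping"}
stdio_line = frame_stdio(ping)
sse_text = frame_sse(ping)
```

The payload is identical in both cases; only the envelope changes, which is why a server can usually support either transport without touching its tools.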

Implementing MCP

To use MCP in your application:

- Deploy or Build an MCP Server – Connect it to your target service (e.g., calendar, Trello, file system). We recommend using SSE to support scalable, persistent connections for your MCP clients.

- Expose Capabilities – Define and register tools, resources, and optionally prompts on the server. These expose what your application can access or execute.

- Extend as Needed – Open-source MCP servers are a great starting point, but you may need custom capabilities. Creating new tools and resources allows your server to serve your unique use cases.

- Connect the MCP Client – Point your client at the MCP server endpoint. The MCP client can now list tools, call them, and read structured results.

- Enrich LLM Prompts – Fetch or generate contextual data via the MCP server, and include it in structured prompts sent to the LLM to guide its reasoning or behavior.

- Test Your LLM Setup – Your AI application is only as strong as the model it uses. Ensure the LLM you’re using can understand and act on tool blocks and structured responses effectively.

This modular pattern allows you to reuse and share integrations, build multiple MCP servers for different services, and confidently scale your AI application over time.
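The steps above can be sketched end to end with a tiny in-process stand-in for a server. This is not the official SDK: `MiniServer`, its `tool` decorator, and the `next_meeting` tool are hypothetical, and a direct method call stands in for the real transport. The final step builds the enriched prompt text that would be handed to whichever LLM you use.

```python
from typing import Optional


class MiniServer:
    """A toy stand-in for an MCP server that keeps tools in a dictionary."""

    def __init__(self):
        self._tools = {}

    def tool(self, description: str):
        """Decorator that registers a function as a callable tool."""
        def register(func):
            self._tools[func.__name__] = (description, func)
            return func
        return register

    def handle(self, method: str, params: Optional[dict] = None) -> dict:
        """Dispatch the two tool-related methods used in this sketch."""
        if method == "tools/list":
            return {"tools": [{"name": name, "description": desc}
                              for name, (desc, _) in self._tools.items()]}
        if method == "tools/call":
            _, func = self._tools[params["name"]]
            text = func(**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": str(text)}], "isError": False}
        raise ValueError(f"Unsupported method: {method}")


server = MiniServer()


@server.tool("Look up the next meeting for a person.")
def next_meeting(person: str) -> str:
    # Hypothetical hard-coded data in place of a real calendar service.
    calendar = {"sam": "Sprint review, Monday 10:00"}
    return calendar.get(person.lower(), "No meetings found")


# Client side: discover tools, call one, then enrich the prompt with the result.
available = server.handle("tools/list")["tools"]
result = server.handle("tools/call", {"name": "next_meeting", "arguments": {"person": "Sam"}})
context = result["content"][0]["text"]
prompt = f"Context from tools:\n- {context}\n\nQuestion: When is Sam's next meeting?"
```

Adding a capability is then a matter of registering another function on the server; the client-side discovery and calling code stays the same.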

There are already 10,000+ MCP servers available across the community today. While many currently run in local environments (e.g. a developer’s laptop), we’re now seeing a shift toward cloud-hosted MCP servers, which enable more scalable, persistent, and production-grade deployments without requiring you to manage your own MCP infrastructure.

What Services and Use Cases Can Benefit from MCP?

As AI continues to evolve, the need for LLMs to operate with real-world context has become increasingly important. MCP is already enabling smarter, more contextual AI across a variety of domains—by making it easier to connect language models to the tools, data, and workflows they need to interact with.

Here are some of the ways organizations are benefiting from MCP today:

🔧 Internal Tools & Automation

Businesses can connect their internal APIs, services, and databases to LLMs using MCP. This allows employees to ask questions like:

“What were sales numbers last quarter?”

“Can you generate the end-of-month report from our internal tool?”

“What does my calendar look like next week?”

“Summarise my emails from yesterday.”

Instead of manually querying systems or writing code, the LLM can call tools and access data directly through MCP. This turns the LLM into a powerful internal assistant that works with your existing stack—without needing custom code for every interaction.

📊 Business Intelligence and Analytics

MCP also enhances how teams interact with BI tools and dashboards. By exposing logs, query results, and reporting functions as resources and tools, the LLM can interpret and summarize business performance metrics on demand.

For example, an analyst might ask:

“Compare customer churn in Q1 vs Q2.”

“Pull the top 5 customer complaints from the last month.”

This eliminates the need for deep SQL knowledge or dashboard digging—freeing up time and making insights more accessible across teams.
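As an illustration, a churn comparison like the one above could be exposed as a single tool. Everything in this sketch is hypothetical, including the `compare_churn` name and the hard-coded figures, which stand in for a query against a real warehouse. The returned dictionary mirrors the `content` and `isError` fields of an MCP tool result.

```python
# Hypothetical quarterly figures in place of a real warehouse query.
CUSTOMERS = {
    "Q1": {"start": 1000, "lost": 50},
    "Q2": {"start": 1200, "lost": 48},
}


def churn_rate(quarter: str) -> float:
    """Customers lost during the quarter as a percentage of those at its start."""
    row = CUSTOMERS[quarter]
    return 100 * row["lost"] / row["start"]


def compare_churn(first: str, second: str) -> dict:
    """Tool handler: compare churn between two quarters."""
    unknown = [q for q in (first, second) if q not in CUSTOMERS]
    if unknown:
        message = f"No data for: {', '.join(unknown)}"
        return {"content": [{"type": "text", "text": message}], "isError": True}
    a, b = churn_rate(first), churn_rate(second)
    text = f"Churn was {a:.1f}% in {first} and {b:.1f}% in {second} ({b - a:+.1f} points)."
    return {"content": [{"type": "text", "text": text}], "isError": False}


print(compare_churn("Q1", "Q2")["content"][0]["text"])
# prints: Churn was 5.0% in Q1 and 4.0% in Q2 (-1.0 points).
```

Reporting a bad quarter through `isError` rather than raising lets the model see the problem and ask the user for a valid period.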

🧠 Knowledge Management & Documentation

LLMs become significantly more useful when they’re able to reference the most up-to-date information. With MCP, documentation, internal wikis, policies, and code logs can all be surfaced as resources. This allows the model to answer technical, legal, or operational questions with the most current and company-specific knowledge.

Think of it as giving your AI assistant real-time access to your entire knowledge base—without retraining or hardcoding data into the model.
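A minimal sketch of that idea: documents are registered under URIs and read on request, so the model always sees the current text rather than a training-time snapshot. The `wiki://` URIs, the `WIKI` dictionary, and the helper names are hypothetical. The result shape follows the `contents` list returned by the `resources/read` method.

```python
# Hypothetical in-memory knowledge base keyed by URI.
WIKI = {
    "wiki://policies/leave": "Staff accrue 20 days of annual leave per year.",
    "wiki://engineering/deploy": "Deploys go out every Tuesday after review.",
}


def list_resources() -> dict:
    """Describe the available documents without sending their bodies."""
    return {"resources": [
        {"uri": uri, "name": uri.rsplit("/", 1)[-1], "mimeType": "text/plain"}
        for uri in sorted(WIKI)
    ]}


def read_resource(uri: str) -> dict:
    """Return the current text of one document."""
    if uri not in WIKI:
        raise KeyError(f"Unknown resource: {uri}")
    return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": WIKI[uri]}]}


# Updating the source is enough; the next read reflects it with no retraining.
WIKI["wiki://policies/leave"] = "Staff accrue 25 days of annual leave per year."
latest = read_resource("wiki://policies/leave")["contents"][0]["text"]
```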

🤖 Building Smarter LLM-Powered Apps

If you’re developing an AI-powered application—like a support agent, coding copilot, or process automation bot—MCP simplifies how you teach that app to interact with external systems. You can expose functionality as tools, structure inputs via prompts, and stream in relevant resources on the fly.

This reduces development effort, promotes reuse, and supports more modular, composable AI application architectures. It also opens the door to multi-agent systems, where multiple LLMs with their own MCP servers can collaborate on complex workflows by sharing structured context.

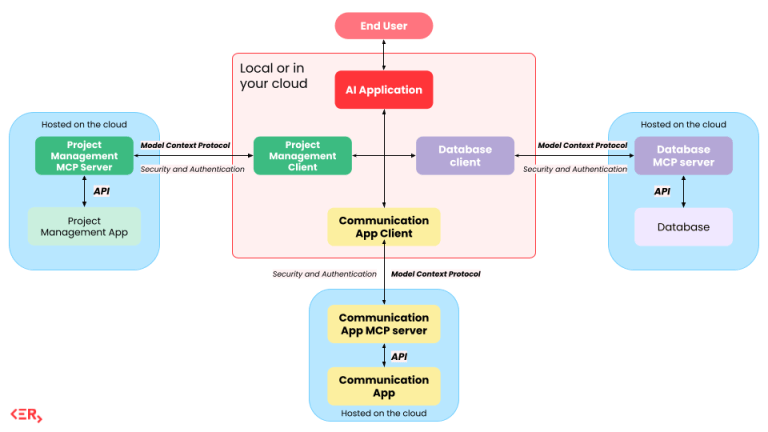

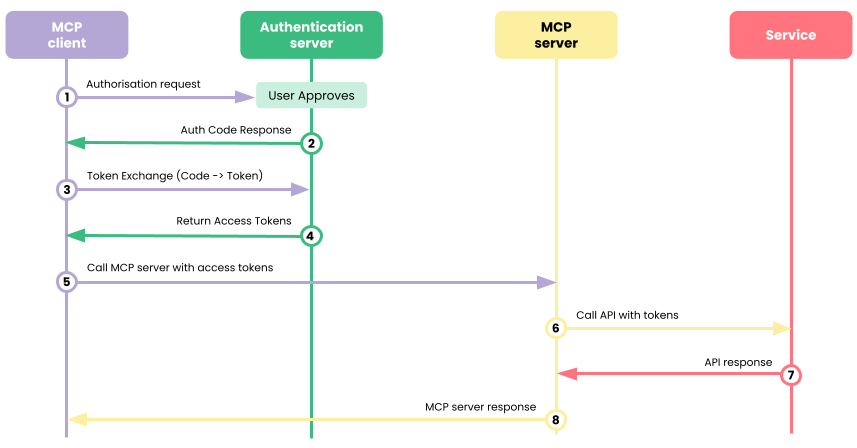

🔐 Security & Access Control: A Growing Priority

As MCP adoption increases and integrations begin to handle sensitive or proprietary data, security and authentication are becoming critical components of the protocol.

Whether you’re connecting an LLM to HR data, customer information, or finance tools, it’s essential to ensure the model only accesses what it’s authorized to see—and nothing more.

The diagram above illustrates how authentication and access control fit into the MCP architecture. Each MCP client is authenticated before it can access the MCP server, i.e. any tools, prompts, or resources that the server exposes. Once authenticated, the client can make requests to the services behind the server. Roles are delegated through a dedicated authentication server, and credentials are validated both by the MCP server and on the service API call. This ensures:

- Only verified applications or users can request sensitive data.

- Data access is scoped and permissioned by authorisation server logic.

- LLMs operate within a defined, controlled context.

While security capabilities are still maturing, features like token-based auth, fine-grained permissions, and secure server containers are already being implemented. As more MCP servers move to the cloud, these security layers will be key to enabling safe, enterprise-grade deployment of AI applications.
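One way to picture token-based, scoped access is the sketch below. It is a toy built on stated assumptions, not the authorization flow defined by MCP or any auth provider: the token table, scope names, tool names, and `authorize_call` helper are all hypothetical, and a real deployment would validate signed tokens issued by the authentication server.

```python
import hmac

# Hypothetical token table; in practice the authentication server
# issues and verifies tokens.
TOKENS = {
    "token-hr-app": {"hr:read"},
    "token-finance-app": {"finance:read", "finance:report"},
}

# Scope each (hypothetical) tool requires before it may be called.
REQUIRED_SCOPE = {
    "get_employee_record": "hr:read",
    "generate_month_end_report": "finance:report",
}


def scopes_for(token: str) -> set:
    """Look up granted scopes, comparing tokens in constant time."""
    for known, scopes in TOKENS.items():
        if hmac.compare_digest(known.encode(), token.encode()):
            return scopes
    return set()


def authorize_call(token: str, tool_name: str) -> bool:
    """Allow a tool call only if the token carries the scope that tool requires."""
    required = REQUIRED_SCOPE.get(tool_name)
    return required is not None and required in scopes_for(token)
```

With this shape, `authorize_call("token-hr-app", "generate_month_end_report")` is refused, which is the "only what it's authorized to see" behaviour described above. Unknown tools are denied by default rather than allowed.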

Final Thoughts

MCP isn’t a new AI model or a fancy algorithm—it’s something perhaps more impactful: a standard protocol that makes integrating real-world data and tools with LLMs much simpler and more scalable.

Just like REST APIs enabled the modern web and USB-C standardized device communication, MCP is shaping up to be the integration standard for AI applications.

While MCP introduces no fundamentally new technology, the way it ties together existing technology makes it an impactful standard for the future of AI applications.

As more MCP servers are hosted in the cloud and security layers mature, we’ll see even broader adoption. The future of LLM-powered apps isn’t just about better models; it’s about smarter context. And MCP is making that possible.

Frequently Asked Questions (FAQs)

1. What is Model Context Protocol (MCP) in AI?

Model Context Protocol (MCP) is an open standard that allows Large Language Models (LLMs) to access real-time tools, data, and prompts—enabling more contextual and capable AI applications.

2. How does MCP improve AI integrations for data and analytics?

MCP simplifies AI integration by standardising how LLMs connect to analytics tools, internal databases, and reporting systems. This allows AI to deliver insights, generate reports, and automate tasks with up-to-date business data.

3. Why is real-time context important for AI models like LLMs?

Out-of-the-box LLMs rely on static training data. With MCP, they can tap into live data sources and tools—making them more relevant, accurate, and useful in dynamic business environments.

4. Who should use MCP—developers or business teams?

Both. Developers benefit from simplified architecture and reusable integrations, while business teams gain smarter AI tools that can handle reporting, automation, and knowledge access with minimal setup.

This blog is written by Jack, assisted by E.R.I.C.A.

About EdgeRed

EdgeRed is an Australian boutique consultancy with expert data analytics and AI consultants in Sydney and Melbourne. We help businesses turn data into insights, driving faster and smarter decisions. Our team specialises in the modern data stack, using tools like Snowflake, dbt, Databricks, and Power BI to deliver scalable, seamless solutions. Whether you need augmented resources or full-scale execution, we’re here to support your team and unlock real business value.
