From ETL to ELT: A Data Engineer’s Guide to Choosing the Right Path

24 Sep 2025

Introduction: A Tale of Two Pipelines

For anyone beginning their journey in data engineering, the distinction between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) can seem like a subtle technicality. However, this difference represents a fundamental evolution in data processing philosophy. As someone who has spent over a decade designing data solutions, particularly in the fast-paced retail sector, I’ve seen firsthand how choosing the right data paradigm isn’t just a technical decision—it’s a strategic one that can define the success of an entire data analytics ecosystem.

This isn’t merely about rearranging acronyms; it’s about adapting to the incredible power of modern data platforms. Understanding the journey from the traditional ETL model to the modern ELT approach is crucial for building scalable, flexible, and future-proof data solutions. This guide will walk you through that evolution and provide a practical framework to help you decide which path is right for your project.

The Classic Approach: What is ETL (Extract, Transform, Load)?

For decades, ETL was the undisputed standard for data integration. Think of it as meticulous preparation before a grand event. The entire process was designed around the limitations of traditional on-premise data warehouses, which had expensive and finite computing power and storage.

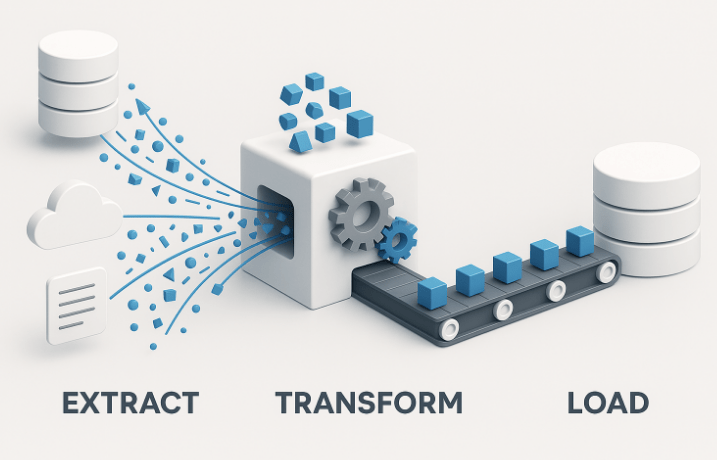

The process follows a rigid, sequential order:

- Extract: Data is pulled from various source systems—such as transactional databases, CRMs, or ERPs.

- Transform: This is where the heavy lifting happens. The extracted data is moved to a separate staging server where it is cleaned, validated, aggregated, and converted into a specific format. Business rules are applied here to shape the data according to predefined requirements.

- Load: Once transformed, the clean, structured data is loaded into the target data warehouse for analysis and reporting.
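To make the three steps concrete, here is a minimal sketch in Python. It assumes an in-memory SQLite database as a stand-in for the target warehouse. The sample rows, the `sales_by_store` table, and the function names are hypothetical, chosen only for illustration. The point to notice is that cleaning and aggregation happen in application code, and only the finished result reaches the warehouse.

```python
import sqlite3

# Hypothetical source rows, standing in for an extract from a CRM or ERP.
SOURCE_ROWS = [
    {"order_id": 1, "store": " sydney ", "amount": "120.50"},
    {"order_id": 2, "store": "Melbourne", "amount": "80.00"},
    {"order_id": 3, "store": "Sydney", "amount": None},  # fails validation
    {"order_id": 4, "store": "sydney", "amount": "39.50"},
]


def extract():
    """Extract: pull rows from the source system."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: clean, validate, and aggregate outside the warehouse."""
    totals = {}
    for row in rows:
        if row["amount"] is None:  # validation rule: drop rows with no amount
            continue
        store = row["store"].strip().title()  # cleaning: normalise store names
        totals[store] = totals.get(store, 0.0) + float(row["amount"])
    return sorted(totals.items())


def load(conn, store_totals):
    """Load: write only the analysis-ready result into the warehouse."""
    conn.execute("CREATE TABLE sales_by_store (store TEXT PRIMARY KEY, total REAL)")
    conn.executemany("INSERT INTO sales_by_store VALUES (?, ?)", store_totals)
    conn.commit()


def run_etl():
    conn = sqlite3.connect(":memory:")  # stand-in for the target warehouse
    load(conn, transform(extract()))
    return conn.execute(
        "SELECT store, total FROM sales_by_store ORDER BY store"
    ).fetchall()
```

Calling `run_etl()` returns `[('Melbourne', 80.0), ('Sydney', 160.0)]`. The row with the missing amount never reaches the warehouse, which is exactly the trade-off of this model: the target stays clean, but the raw detail is gone unless the staging layer keeps it.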

ETL was born in an era where data was predominantly structured, and analytics use cases were well-defined. The primary goal was to load analysis-ready data into a system that was optimised for querying, not for large-scale transformations.

The Modern Shift: The Rise of ELT (Extract, Load, Transform)

The game changed with the advent of the cloud. The emergence of powerful, scalable cloud data warehouses like Snowflake, Google BigQuery, and Amazon Redshift turned the traditional model on its head. These platforms offer storage and compute that scale on demand and are typically billed by usage, so heavy transformations no longer require buying and sizing hardware up front.

This technological leap gave rise to ELT. Where ETL prepares every ingredient in a separate kitchen before anything reaches the table, ELT brings the raw ingredients straight into a well-equipped kitchen and does the cooking there.

The ELT process is as follows:

- Extract: Data is pulled from its source systems, just like in ETL.

- Load: Here’s the key difference. The raw, untransformed data is immediately loaded into the cloud data warehouse. This dramatically reduces the time to get data into the target system.

- Transform: All transformations (cleansing, modelling, and applying business logic) happen within the data warehouse itself, leveraging its massively parallel processing (MPP) engine. Tools like dbt (data build tool) have become central to the modern ELT workflow, allowing engineers to manage these in-warehouse transformations with version control, testing, and documentation.
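The same flow can be sketched in Python, again assuming an in-memory SQLite database as a stand-in for a cloud warehouse. The table, view, and sample rows are hypothetical. Here the raw rows are loaded untouched, and the cleaning and aggregation are expressed as SQL that runs inside the warehouse. In a dbt project, a `SELECT` like this one would typically live in a model file.

```python
import sqlite3

# Hypothetical raw extract, loaded exactly as it arrives from the source.
RAW_ROWS = [
    (1, " sydney ", "120.50"),
    (2, "Melbourne", "80.00"),
    (3, "Sydney", None),
    (4, "sydney", "39.50"),
]


def run_elt():
    conn = sqlite3.connect(":memory:")  # stand-in for the cloud warehouse

    # Load: land the data with no cleaning or filtering.
    conn.execute("CREATE TABLE raw_orders (order_id INTEGER, store TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ROWS)

    # Transform: the business logic is SQL executed by the warehouse engine.
    conn.execute(
        """
        CREATE VIEW sales_by_store AS
        SELECT UPPER(TRIM(store)) AS store,
               SUM(CAST(amount AS REAL)) AS total
        FROM raw_orders
        WHERE amount IS NOT NULL
        GROUP BY UPPER(TRIM(store))
        """
    )

    raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
    model = conn.execute(
        "SELECT store, total FROM sales_by_store ORDER BY store"
    ).fetchall()
    return raw_count, model
```

All four raw rows stay queryable in `raw_orders`, including the one with the missing amount, while `sales_by_store` exposes the cleaned totals. Changing the business rule means redefining the view, not rebuilding the ingestion step.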

This approach offers incredible flexibility. Analysts and data scientists can access raw data, and transformations can be adapted on the fly as business requirements evolve, without needing to re-architect the entire ingestion pipeline.

The Strategic Choice: How to Decide Between ETL and ELT

So, how do you choose? The answer depends entirely on your project’s context. Here’s a framework to guide your decision.

When to Consider Traditional ETL:

While ELT is the modern standard for many use cases, ETL remains the superior choice in specific scenarios:

- Strict Data Compliance and Privacy: If you need to mask, encrypt, or remove personally identifiable information (PII) before it lands in your data warehouse, ETL’s pre-load transformation stage is where that happens, so unprotected values never reach the warehouse at all.

- Legacy Systems and Limited Compute: If you’re working with an on-premise data warehouse that lacks the power for large-scale transformations, performing them in a dedicated staging environment is more efficient.

- Small, Well-Defined Datasets: For smaller datasets with fixed, unchanging transformation logic, the simplicity and predictability of an ETL process can be more straightforward to manage.
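The compliance scenario is the easiest of these to illustrate. The sketch below pseudonymises an email address and drops a phone number before anything is written to the target. It assumes a keyed hash (HMAC-SHA256) is an acceptable masking technique for your requirements, which is something to confirm with your compliance team. The key, field names, and `customers` table are hypothetical.

```python
import hashlib
import hmac
import sqlite3

# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-me"


def pseudonymise(value, key=SECRET_KEY):
    """Replace a PII value with a keyed hash so it can still be joined on."""
    normalised = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalised, hashlib.sha256).hexdigest()


def mask_record(record):
    """Transform step: mask or remove PII before the load."""
    masked = dict(record)
    masked["email"] = pseudonymise(record["email"])
    masked.pop("phone", None)  # remove fields the warehouse should never hold
    return masked


def load_customers(records):
    conn = sqlite3.connect(":memory:")  # stand-in for the target warehouse
    conn.execute(
        "CREATE TABLE customers (customer_id INTEGER, email_token TEXT, state TEXT)"
    )
    conn.executemany(
        "INSERT INTO customers VALUES (:customer_id, :email, :state)",
        [mask_record(r) for r in records],
    )
    return conn.execute(
        "SELECT customer_id, email_token, state FROM customers ORDER BY customer_id"
    ).fetchall()
```

Because the email is normalised before hashing, the same customer produces the same token across sources, so joins still work even though the warehouse never stores the address itself.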

When to Embrace Modern ELT:

ELT is the default choice for most modern data architectures for several compelling reasons:

- Leveraging a Cloud Data Warehouse: If your infrastructure is built on a powerful platform like Snowflake, BigQuery, or Redshift, you should use its compute engine for transformations.

- Need for Speed and Agility: Because nothing is transformed in flight, raw data lands in the warehouse sooner and is available for analysis earlier. It also gives you the flexibility to create new data models as business questions arise.

- Big Data and Diverse Data Types: When dealing with large volumes of structured, semi-structured (e.g., JSON, XML), and unstructured data, ELT is far more capable. You can load everything first and decide how to parse and model it later.

- Fuelling Data Science: Feature engineering for machine learning often benefits from raw, granular data that aggregated reporting tables have already discarded. ELT preserves this raw data, making it readily available for data scientists.
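The "load everything first, decide how to parse it later" idea can be sketched as follows. Raw JSON events are stored as text, and fields are pulled out only at query time. This assumes an in-memory SQLite database as the warehouse stand-in, and `json_field` is a hypothetical user-defined function registered for the example; a real cloud warehouse would use its own native JSON functions instead.

```python
import json
import sqlite3

# Hypothetical raw events with differing shapes, loaded without a fixed schema.
RAW_EVENTS = [
    '{"event": "purchase", "customer": {"id": 7}, "total": 42.0}',
    '{"event": "page_view", "customer": {"id": 7}, "url": "/sale"}',
    '{"event": "purchase", "customer": {"id": 9}, "total": 8.5, "coupon": "SPRING"}',
]


def json_field(payload, dotted_path):
    """Hypothetical UDF: return the value at a dotted path, or None if absent."""
    node = json.loads(payload)
    for key in dotted_path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    # SQLite can only receive scalars, so nested values go back as JSON text.
    return json.dumps(node) if isinstance(node, (dict, list)) else node


def build_warehouse():
    conn = sqlite3.connect(":memory:")
    conn.create_function("json_field", 2, json_field)
    # Load: one text column, no parsing decisions made yet.
    conn.execute("CREATE TABLE raw_events (payload TEXT)")
    conn.executemany("INSERT INTO raw_events VALUES (?)", [(e,) for e in RAW_EVENTS])
    return conn


def purchases_by_customer(conn):
    """Transform, decided later: model spend per customer from the raw payloads."""
    return conn.execute(
        """
        SELECT json_field(payload, 'customer.id') AS customer_id,
               SUM(json_field(payload, 'total')) AS spend
        FROM raw_events
        WHERE json_field(payload, 'event') = 'purchase'
        GROUP BY customer_id
        ORDER BY customer_id
        """
    ).fetchall()
```

If a new question comes up later, such as how many events carried a coupon, it can be answered from the same `raw_events` table without re-ingesting anything, because no field was discarded at load time.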

Challenges and Considerations

Neither paradigm is a silver bullet. It’s important to be aware of the challenges:

- ETL Challenges: The primary drawbacks are its rigidity and potential for bottlenecks. When a new data source is added or business logic changes, the transformation process often has to be re-engineered before any data reaches the warehouse, which is slow and costly.

- ELT Challenges: The greatest risk with ELT is the potential to create a “data swamp”. Dumping massive amounts of raw, ungoverned data into a data lake or warehouse without proper documentation and management can lead to chaos. A strong data governance strategy and meticulous in-warehouse transformation management (using tools like dbt) are critical for success.
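One practical guard against a data swamp is to run automated checks on tables after they are loaded. The sketch below is loosely modelled on the idea behind dbt's `not_null` and `unique` tests, but it is plain Python over SQLite rather than dbt itself. The function names and the `(check, table, column)` layout are hypothetical.

```python
import sqlite3


def not_null_failures(conn, table, column):
    """Count rows where the column is NULL."""
    # Table and column names are interpolated, so they must come from
    # trusted configuration, never from user input.
    return conn.execute(
        f"SELECT COUNT(*) FROM {table} WHERE {column} IS NULL"
    ).fetchone()[0]


def unique_failures(conn, table, column):
    """Count distinct non-NULL values that appear more than once."""
    return conn.execute(
        f"SELECT COUNT(*) FROM (SELECT {column} FROM {table} "
        f"WHERE {column} IS NOT NULL GROUP BY {column} HAVING COUNT(*) > 1)"
    ).fetchone()[0]


def run_checks(conn, checks):
    """Run each (check, table, column) triple and report failure counts."""
    results = {}
    for check, table, column in checks:
        results[f"{check.__name__}:{table}.{column}"] = check(conn, table, column)
    return results


def demo():
    conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
    conn.execute("CREATE TABLE raw_orders (order_id INTEGER, store TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?)",
        [(1, "Sydney"), (2, None), (2, "Melbourne")],
    )
    return run_checks(
        conn,
        [
            (not_null_failures, "raw_orders", "store"),
            (unique_failures, "raw_orders", "order_id"),
        ],
    )
```

A non-zero count for any check is a signal to stop downstream models from building on that table until the issue is understood, which keeps ungoverned raw data from quietly flowing into reports.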

Concluding Thoughts

The evolution from ETL to ELT is more than just a technical trend; it’s a strategic response to the possibilities unlocked by the cloud. It reflects a shift from a world of data scarcity to one of data abundance. While the modern ELT approach offers the flexibility and scalability needed for today’s data-driven world, the traditional ETL process still holds its ground in specific, compliance-heavy, or legacy contexts.

As a data engineer, mastering both paradigms—and, more importantly, understanding when to apply each—is fundamental to designing robust and effective data solutions that truly empower an organisation.

This post was written by Arun.

About EdgeRed

EdgeRed is an Australian boutique consultancy with expert data analytics and AI consultants in Sydney and Melbourne. We help businesses turn data into insights, driving faster and smarter decisions. Our team specialises in the modern data stack, using tools like Snowflake, dbt, Databricks, and Power BI to deliver scalable, seamless solutions. Whether you need augmented resources or full-scale execution, we’re here to support your team and unlock real business value.
